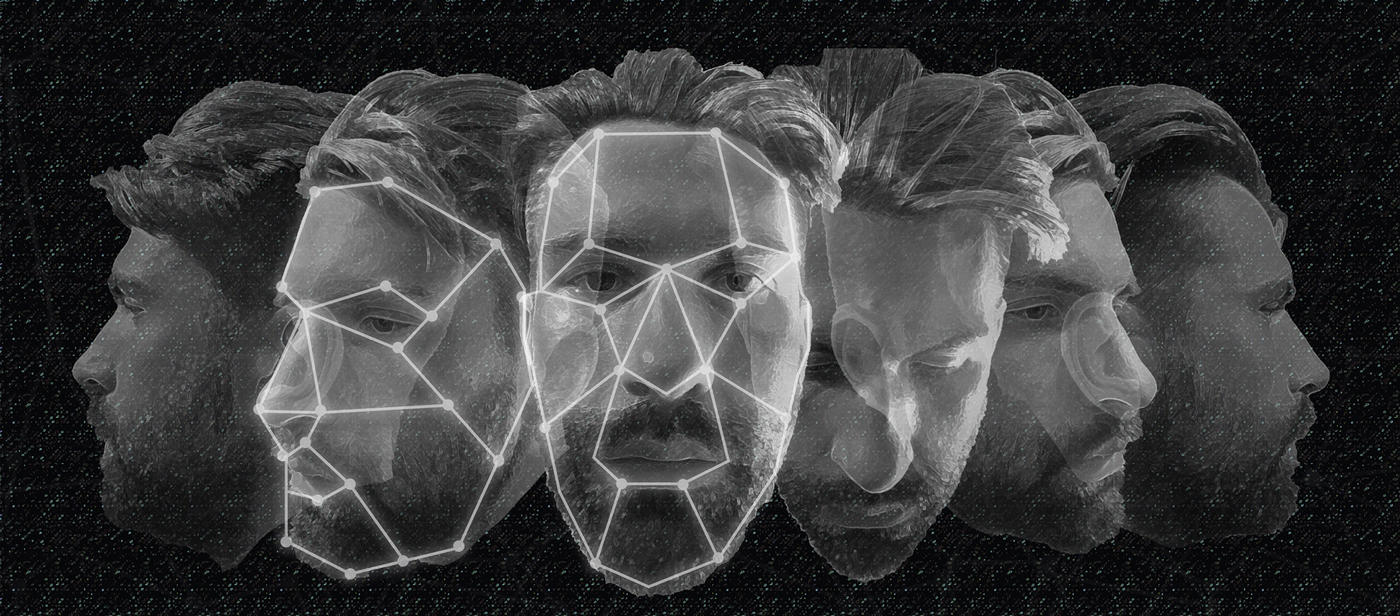

Eurostar passengers will soon have the option to go through a face verification process that lets them walk through a “facial biometric corridor” to board a train, without presenting a passport or other travel documents. The solution is being developed by British technology company iProov in partnership with Eurostar and Canadian travel company WorldReach Software. It will first go live at London’s St Pancras International station by the end of March 2021.

The Financial Times, which first reported the development, said that the system will be opt-in. Before travelling, passengers will have to scan their identity documents using the Eurostar app and, according to the FT, will also have to upload an image of their face to verify their identity.

The facial biometric check uses “controlled illumination” to authenticate a person’s identity, iProov said. The illumination is also used to check that the image shows a real person rather than a photo, video, mask, or even a deepfake.

After authentication, the passenger will receive a confirmation message that their identity document has been secured, after which they won’t need a physical copy of their ticket or passport until they reach their destination. For people who don’t have a smartphone, a kiosk at the rail station will allow them to carry out a similar process.

A few things remain unclear about iProov’s facial verification algorithm, such as its accuracy rate and the dataset on which its facial recognition model was trained.

The problem with facial recognition tech

The announcement comes…