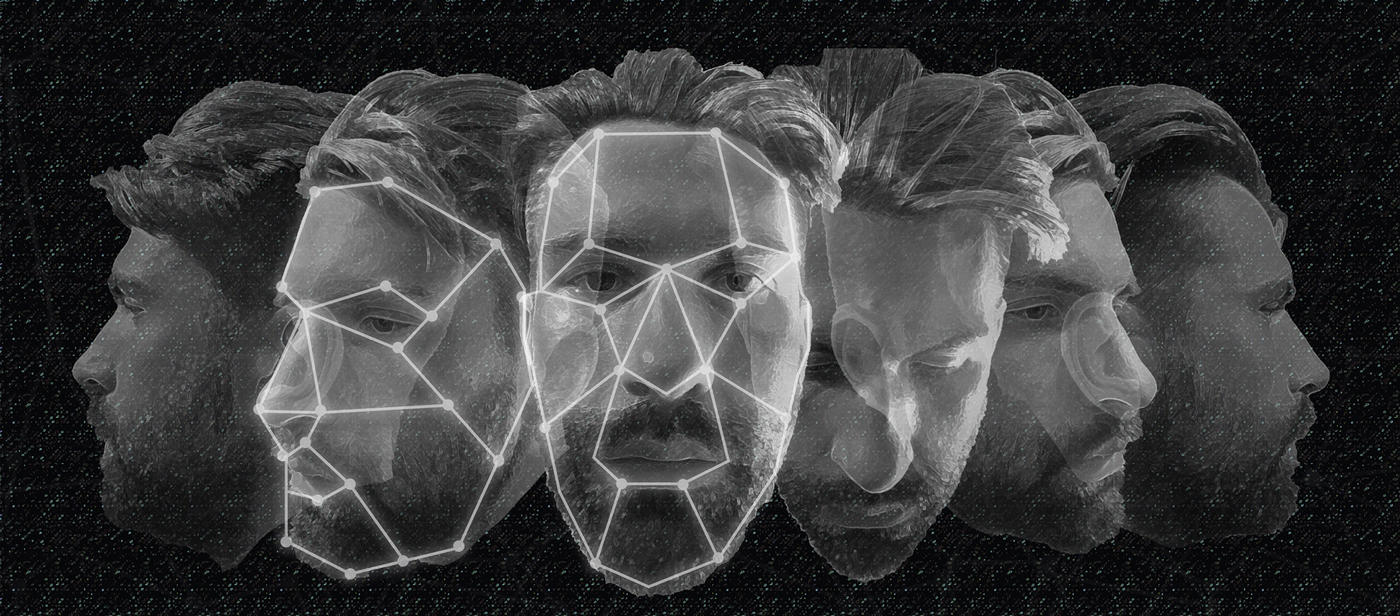

Amazon is halting police use of its controversial facial recognition system, Rekognition, for a year, the company announced in a brief blog post on June 10. The company didn’t reveal any detail about its plans for Rekognition during the moratorium period, but said the pause “might give Congress enough time to implement appropriate rules”, and that the company “stand[s] ready to help if requested”. During the moratorium, it will continue providing the software to rights organisations such as Thorn, the International Center for Missing and Exploited Children, and Marinus Analytics.

Amazon’s announcement comes just two days after IBM announced that it will stop offering “general purpose facial recognition and analysis software”. Unlike IBM, which raised issues of mass surveillance and racial profiling, Amazon did not offer any explicit reason for its decision to stop selling Rekognition to the police for a year.

What about law enforcement agencies that are not the police? Amazon did not specify whether it will also pause sales to other law enforcement agencies during the moratorium. This is important because Rekognition is already licensed by a number of law enforcement agencies in the US. It is also not clear whether Amazon will stop developing the facial recognition system during the moratorium.

It is possible that this moratorium doesn’t mean much, especially since the company has never revealed how many police departments use its service. In fact, until last year, only one police department, in Oregon, was reportedly using it, and even that use has since stopped. Also,…