“There is palpable, real-world harm that social media platforms are doing in India and there isn’t actually any concomitant acknowledgement of the harm that is being done or any kind of similar urgency to address these harms in India,” Ruchi Gupta, co-founder of the Future of India Foundation (FOI), said at a press conference on May 5, while announcing the launch of the foundation’s report on the ‘Politics of Disinformation’.

The report highlighted issues around disinformation on social media platforms, the impact it has on users, what the platforms currently do to moderate content, and more. It also suggested alternative measures to deal with social media disinformation, such as a transparency law for platforms, chronological feeds as the default setting, and removing trends, among others.

FOI had also testified before the Parliamentary Standing Committee on Communications and Information Technology last month on issues of citizens’ rights and preventing misuse online.

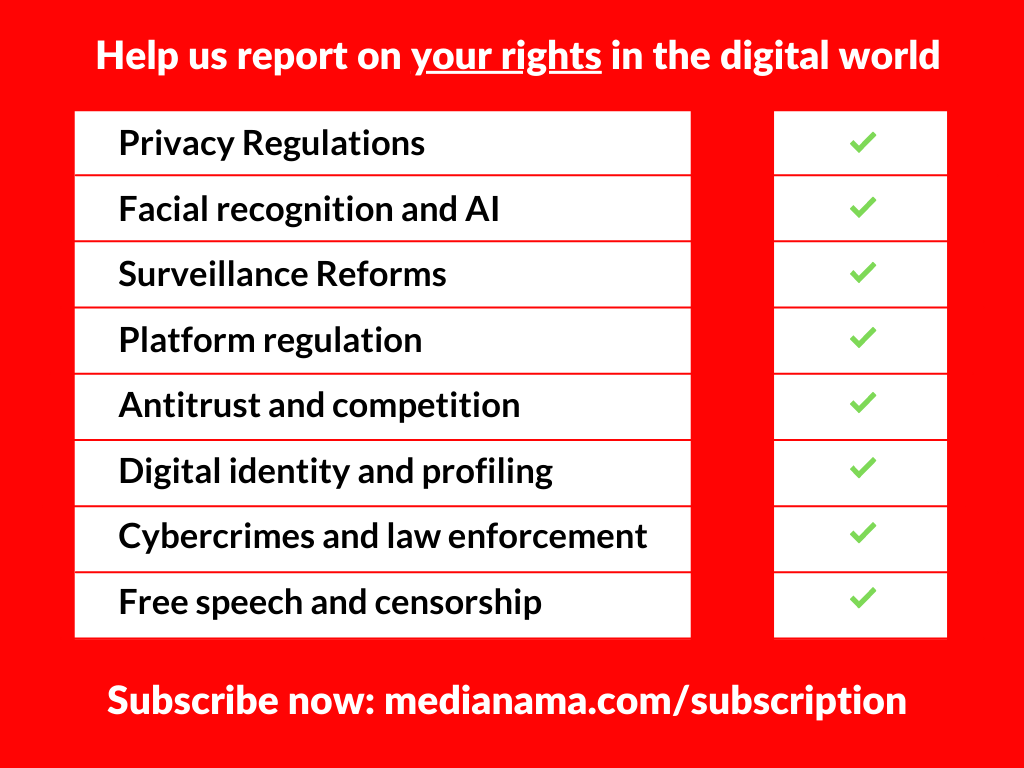

Key takeaways from the report

“Future of India Foundation has been set up primarily to deal with the big picture youth issues. But one of the reasons we thought that this particular issue was very important is because young people generally enter into the democratic space through the public discourse... But it is no longer playing that role. Instead of educating young people, instead of providing them that space of engagement in the democratic space through the democratic process, public discourse is now providing space for hate, bigotry, misinformation,” Gupta said.

The report is based on research conducted through youth focus groups in eight different States and analysis of media reports, FOI said. Here are some of its recommendations:

Introducing a transparency law: India needs to enact a comprehensive transparency law. The Ad Library by Facebook doesn’t facilitate easy understanding and prompt responses. Certain kinds of data sharing also raise privacy concerns, the report said.

“Ad library, which is a whole sort of mechanism that claims that we (Meta) are transparent, however it’s very difficult to do research on it unless you know the tools to scrape data, etc. It’s aggregated in a way that you can’t really find out who is funding these ads, who’s funding this speech, etc. Similarly, the transparency reports that Facebook releases of content takedowns: a lot of times they aggregate at the global level. At the country level, and specific global level, you don’t realize how different regulations are working,” said Kumar Sambhav, a journalist who also worked on the report.

Subjecting content moderation to parliamentary oversight: There is a lack of transparency and legitimacy in leaving content moderation to executives of private companies, the report said. It also acknowledged the risks to free speech and democratic dissent in leaving content moderation to the government. Hence, the report proposed the creation of a statutory regulator under parliamentary oversight to manage issues related to content moderation on platforms. Such a regulator would have powers to:

- Lay out broad processes for governance of speech

- Set standards for transparency for social media platforms (within the framework of the suggested transparency law) and audit social media platforms for compliance with it

- Develop a point of view on key misinformation themes/events in the country especially on issues which have immediate public policy implications e.g., public health misinformation on COVID-19

Putting labels on trustworthiness of content: Users or accounts that have repeatedly put out information that has been debunked by fact-checkers, is borderline, or is frequently unoriginal could be given labels like ‘low credibility’, the report suggested. This, the report said, will be significant because studies have found that a small subset of sites/handles are responsible for the bulk of misinformation on platforms.

Platforms should choose between amplification and distribution: Platforms are currently consistently violating their own content policies by amplifying certain types of content, the report said. Giving an example of such amplification, Gupta said:

“Now, platforms in a bid to increase user engagement and time spent on the platform, they are starting to curate content, meaning they’re starting to amplify. They’re starting to push new content into users’ feeds from people they don’t directly follow; and now, platforms are starting to directly pay creators for content which will be exclusive to that particular platform.”

According to the report, platforms should select one of three approaches to amplifying content:

- A hands-off approach which involves constraining reach of content to organic reach through chronological feeds which should be kept as the default option, and restricting a user’s access only to content they have specifically opted to receive.

- Taking responsibility for amplified content and exercising clear editorial choice

- Amplifying content providers who have gone through a vetting process to ensure some integrity and quality of content. Certain content providers should be vetted for their compliance with ‘criteria of due process’, the report said. This would especially apply to those creating political content, to ensure integrity and trustworthiness. Further, it would create incentives for more ‘good actors’ to emerge who comply with these criteria. The report also suggested using community-based classification of ‘reputation’ and ‘consensus’ to create a list of ‘good actors’.

Holding very popular users to a different standard: Given that certain users can gain a huge following by repeatedly posting false or inauthentic content, stringent content guidelines can be made especially for them. Repeated violations of these guidelines should also lead to them being deplatformed, the report said.

Ensuring investments in integrity are proportional to userbase in a region: Platforms should ensure that they are able to understand the local context, languages, etc., while doing content moderation. The size of their integrity teams should be scaled to their user base in a region rather than to their revenues, since the latter penalizes large but developing countries, the report said.

Removing incentives for engagement over quality of content: Features such as trending topics and engagement numbers on posts could be eliminated by platforms to reduce the incentives for posting polarising, false content, the report said. Platforms end up encouraging such content in two ways, it added: through amplification algorithms that are predicated on engagement instead of quality, and by signaling high engagement as a marker of a piece of content’s importance.

Making fact-checks more effective and valuable: While misinformation spreads rapidly, fact-checks do not get similar targeted distribution, the report said. It also suggested ways in which platforms could improve the targeted distribution of fact-checks:

- Expand fact-checking capacity to include all languages above a certain threshold of users

- Work with relevant stakeholders to develop specific measures to reduce disinformation during elections and ensure parity of prioritisation for India and Global South with US 2020 elections

- Translate fact-checked information into regional languages for accessibility

- Push fact-checked information into user feeds and target it to individuals who have shown prior interest in or engagement with related topics (to increase the likelihood that users exposed to disinformation will see fact-checks)

- Recruit “Fact Ambassadors” for distribution of fact-checked information. This will improve distribution and reception of fact-checked information. It will also provide social media platforms with valuable feedback on information consumption behaviour

- Use AI to provide representative counterview by surfacing links to good quality news reports and comments. Some platforms like Facebook, YouTube, and Google are already doing this and this measure can be expanded to cover all content above a certain threshold of engagement

- Provide crowdsourcing channels to flag content that is going viral, is time-sensitive, and may lead to real-world harm for real-time fact-checking and proactive notification to impacted users (this is already being done in some cases)

Scaling digital literacy initiatives: Digital literacy initiatives are undertaken as a measure to combat misinformation online; however, the report said that platforms have restricted these initiatives to public relations exercises. These initiatives should be linked with targets that are consonant with the platforms’ user bases so that a ‘critical’ portion of such users undergo such initiatives, the report said.

“Facebook or Twitter or WhatsApp have, I don’t know, 350 to 400 million users or something? And their digital literacy workshops reach a thousand or something. So one of the recommendations we made is that your digital literacy efforts have to be in some way commensurate and proportional to your user base size,” Gupta said during the press conference.

What’s the IT Standing Committee up to?

The Parliamentary Standing Committee on Communications and Information Technology has been looking into the subject of ‘safeguarding citizens’ rights and prevention of misuse of social/online news media platforms including special emphasis on women security in the digital space’. Besides FOI, the committee has also heard from representatives of Meta and the Ministry of Electronics and Information Technology on the matter.

In December 2021, WhatsApp’s public policy director Shivnath Thukral briefed the committee about its approach to content and user privacy on the platform, MediaNama had reported. The committee members also asked Meta about allegations from whistleblowers about the company’s content moderation practices, which Thukral reportedly brushed aside by referring to the whistleblowers as ‘disgruntled employees’. However, committee members had not been satisfied with Meta’s responses and had asked it to reappear before the committee with written responses.

Earlier, the committee had submitted a request asking Lok Sabha Speaker Om Birla to let it summon Facebook whistleblower Sophie Zhang, who is based in the US, to depose before the committee. In-person testimony by witnesses from abroad requires the Speaker’s consent. However, according to reports, Birla has still not responded to the request.

This post is released under a CC-BY-SA 4.0 license. Please feel free to republish on your site, with attribution and a link. Adaptation and rewriting, though allowed, should be true to the original.

