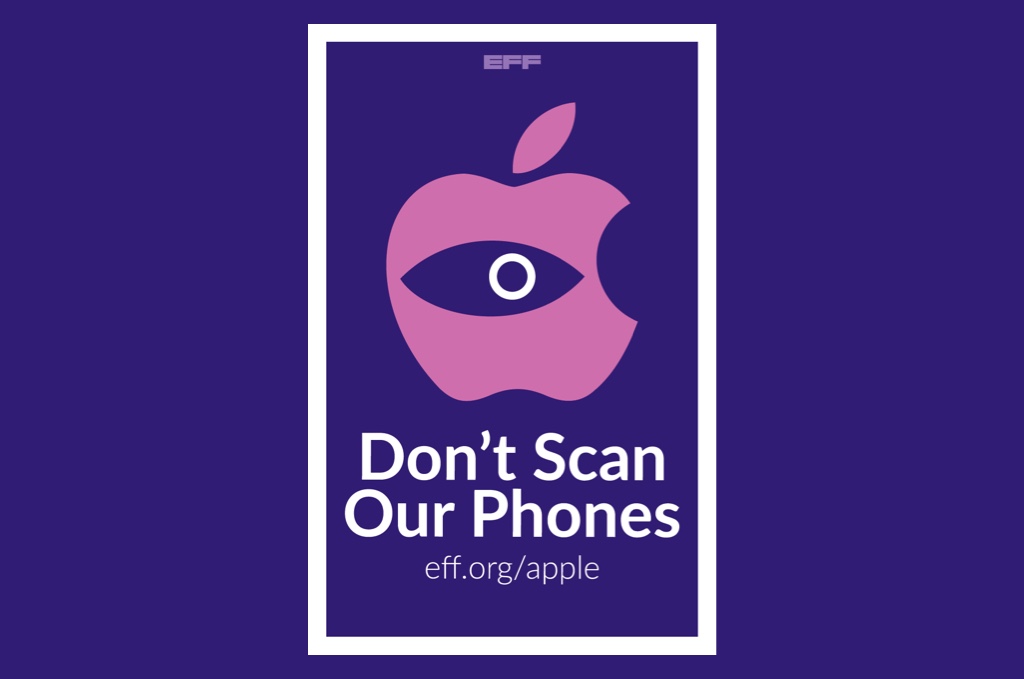

After facing pressure from privacy advocates over its new child safety features, Apple said on September 3 that it would delay their implementation, but advocates are asking Apple to abandon the plan entirely. These features have come under heavy criticism because many believe that the same technologies can be used by governments for censorship or by parents to undermine the privacy and freedom of expression of children.

Nation-wide protest planned in the US

Activists from the Electronic Frontier Foundation (EFF), Fight for the Future, and other civil liberties organizations have called for a nationwide protest in the US against Apple's "plan to install dangerous mass surveillance software." The protest is scheduled for September 13, a day before Apple's big iPhone launch event.

"EFF is pleased Apple is now listening to the concerns of customers, researchers, civil liberties organizations, human rights activists, LGBTQ people, youth representatives, and other groups, about the dangers posed by its phone scanning tools. But the company must go further than just listening, and drop its plans to put a backdoor into its encryption entirely," the organisation said.

"There is no safe way to do what Apple is proposing. Creating more vulnerabilities on our devices will never make any of us safer." - Caitlin Seeley George, campaign director at Fight for the Future

On September 8, privacy advocates submitted nearly 60,000 petitions to Apple. Previously, on August 19, an international coalition of 90+ civil society organizations wrote an open letter to Apple, calling on the company to abandon its plans. "We are concerned that they will…