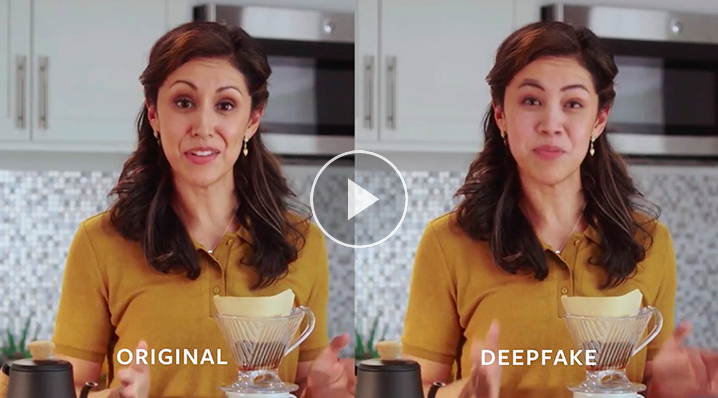

Deepfakes can help authoritarian ideas flourish even within a democracy, enable authoritarian leaders to thrive, and be used to justify the oppression and disenfranchisement of citizens, Ashish Jaiman, director of technology and operations at Microsoft, said on Thursday. Authoritarian regimes “can also use deepfakes to increase populism” and consolidate power, and deepfakes can also be very effective for nation states seeking to sow the seeds of polarisation, amplify division in society, and suppress dissent, Jaiman added while speaking at CyFy, a cybersecurity conference organised by ORF. Jaiman also pointed out that deepfakes can be used to make pornographic videos, and the target of such efforts will “exclusively be women”.

Deepfakes are realistic imitations, usually in video or audio form, of people speaking. While some uses of the technology can be innocuous, such as swapping your face onto a friend’s for comedic effect, deepfakes can also be used to run propaganda campaigns. Almost all major social media platforms, including Facebook, Twitter and YouTube, have policies in place to discourage the propagation of deepfakes in one way or another, albeit with varying degrees of stringency.

How deepfakes can affect democracies

“Deepfakes can undermine trust in institutions and diplomacy, act as a powerful tool by malicious nation states to undermine public safety and create uncertainty and chaos,” Jaiman said. “Imagine a deepfake of a diplomat spewing damaging remarks for a country's leader”, he added.

Jaiman also laid out the many ways in which deepfakes can potentially affect democracies:

Alter democratic discourse:…