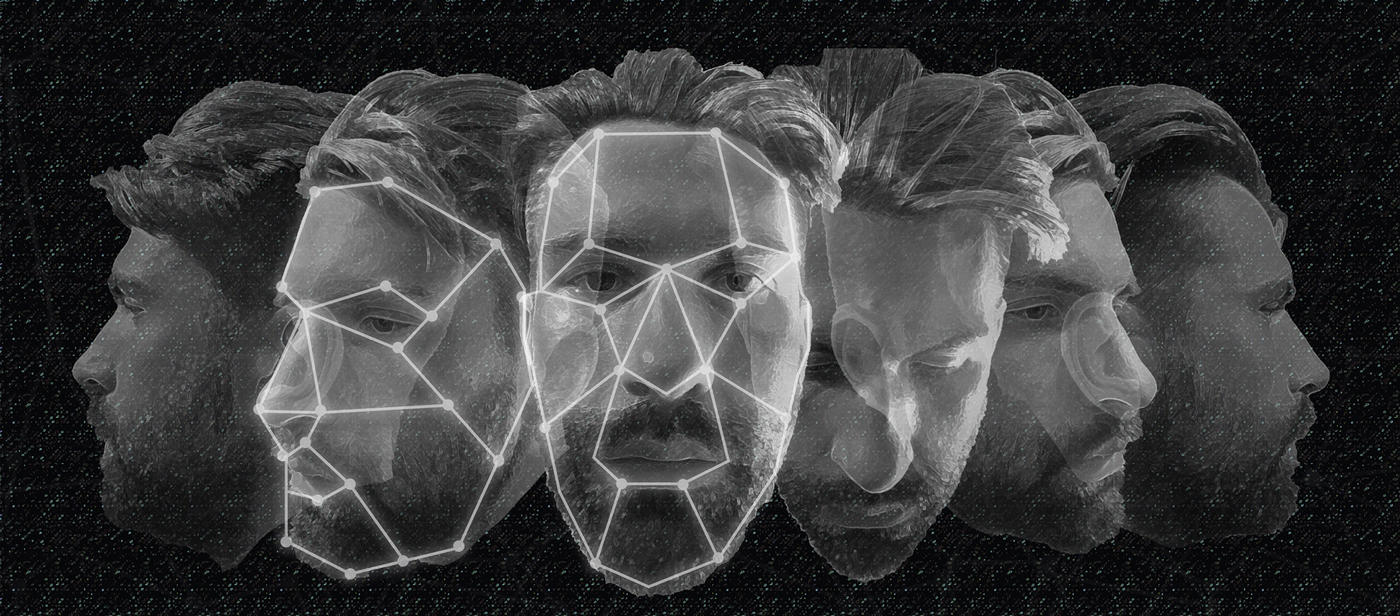

Boston has banned the city government's use of facial recognition technology and prohibited any city official from obtaining it through third parties. The ban follows a unanimous vote by the city council on Wednesday in favor of an ordinance sponsored by councillors Michelle Wu and Ricardo Arroyo. The measure now goes to Boston's mayor to be signed into law. With this, Boston joins cities such as San Francisco, Oakland, Cambridge, Berkeley, and Somerville, which have already banned government use of the technology.

The ban comes against the backdrop of the arrest of a Black man, Robert Julian-Borchak Williams, following an incorrect facial recognition match. Williams was accused of a shop theft that took place in October 2018. A still from the shop's surveillance cameras was uploaded to Michigan's statewide facial recognition database, which led to Williams' picture being included in a photo lineup shown to the store's security guard, who identified Williams as the culprit. However, during the interrogation, Williams held up a photo of the shoplifter next to his own face, after which one of the detectives said that "the computer must have gotten it wrong," Williams wrote in The Washington Post, recounting the incident. Williams' case may well be the first known instance of an incorrect facial recognition match leading to real-world consequences for an individual, but chances are it won't be the last.

"Boston should not use racially discriminatory technology that threatens the privacy and basic rights of our residents. This ordinance codifies our values that community…