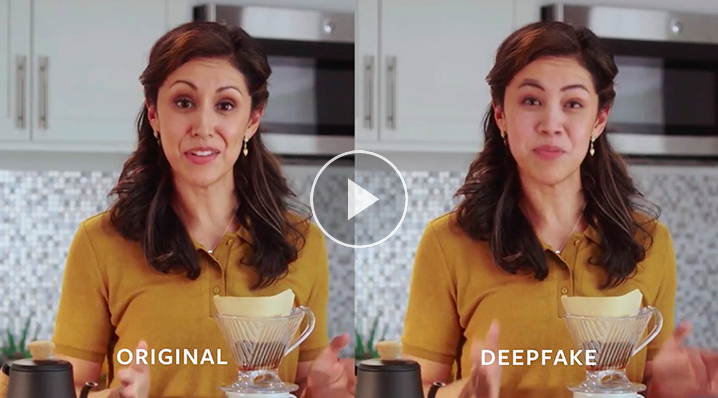

Earlier this month, the US state of California enacted two laws: one banning political deepfakes during elections and one banning non-consensual pornographic deepfakes. The bills, known respectively as AB 730 and AB 602, were signed into law by California Governor Gavin Newsom on October 3.

Ban on political deepfakes

Political deepfakes drew attention earlier this year after a doctored video of US House Speaker Nancy Pelosi went viral, depicting her as drunk and slurring her words. California's ban on political deepfakes aims to tackle voter manipulation ahead of the 2020 US presidential election. The law will remain in force until January 1, 2023, unless extended.

The law bans deepfakes in campaign material relating to any political candidate. It also bans any other intentionally manipulated image, audio, or video of the candidate's appearance, speech, or conduct.

Ban on deepfakes in campaign material: The law prohibits any person or entity (including a campaign committee) from producing, distributing, publishing, or broadcasting campaign material that superimposes a person's photograph over a candidate's (or vice versa) while knowing, or turning a blind eye to the fact, that doing so will cause a false representation. This provision covers only campaign material that uses pictures or photographs, and it applies to elections for any public office.

There is one exception: if the material is accompanied by a disclaimer stating "This picture is not an accurate representation of fact" in the largest font size used anywhere in the campaign material, then this ban will not…