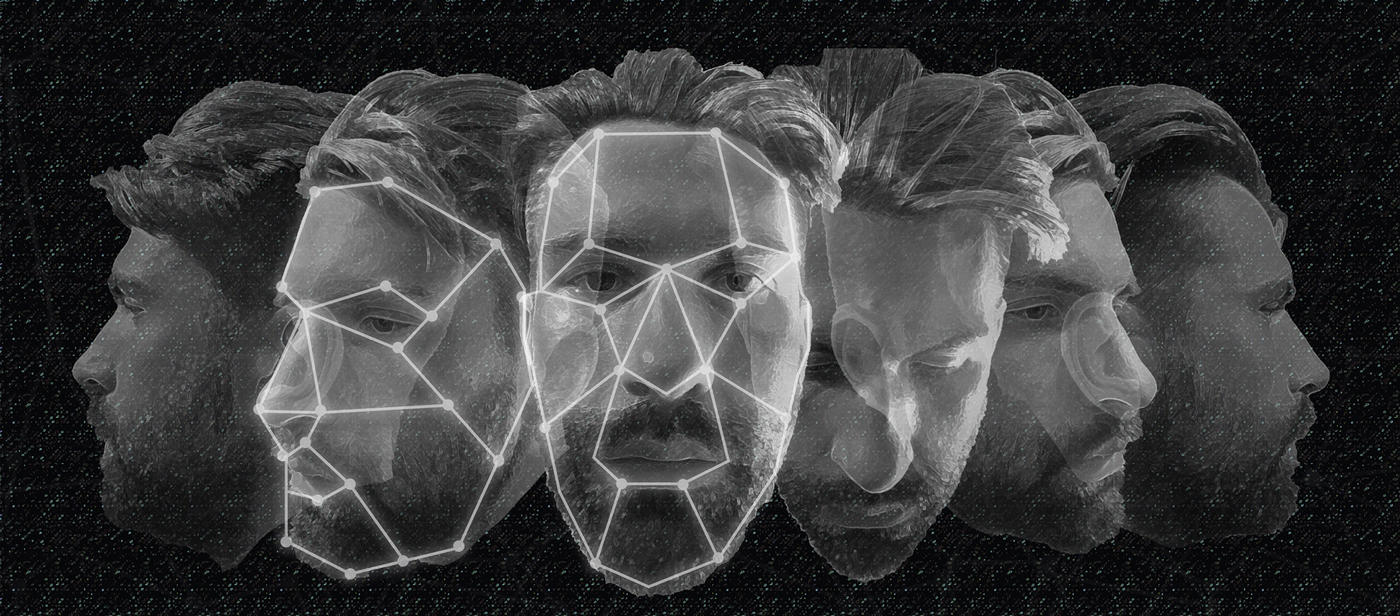

Google has released “a large dataset” of visual deepfakes that it created for researchers “to directly support deepfake detection efforts”, the company said on September 26. Deepfake videos are highly convincing AI-generated videos of real people and events that can potentially be used for disinformation campaigns.

Why this matters: Deepfake videos are becoming more sophisticated over time. In 2018, Indian journalist Rana Ayyub’s face was superimposed onto a pornographic video, which was later shared thousands of times. Deepfakes of public officials have the potential to change the course of elections and adversely impact public discourse. Read this report by The Guardian to understand how disconcerting this technology can be. On top of that, the technology is becoming more readily available. Face-swapping app Zao, which is available to everyone, can render highly convincing deepfake videos from just a single image.

How did Google create these deepfakes? It worked with paid and consenting actors to record hundreds of videos, and then created thousands of deepfakes using publicly available deepfake generation methods.

Is someone else working to address the issue of deepfakes? Yes. Facebook and Microsoft announced the Deepfake Detection Challenge, under which they will release a dataset of videos and deepfakes that the community can use to develop detection technology.

Go Deeper: Just in case you were wondering, here is a highly convincing deepfake video of Mark Zuckerberg. Also, here is a video we did on the problems with Zao and…